- Service which helps the user monitor applications and automatically adjusts capacity, based on the user's requirements, to maintain steady, predictable performance at the lowest possible cost

- Benefits:

  - better fault tolerance

  - better cost management

  - reliability of your service

  - scalability

  - flexibility - changes can be made on the fly

- Snapshot vs AMI

  - Snapshot

    - used as a backup of a single EBS volume attached to an EC2 instance

    - opt for it when the instance contains multiple static EBS volumes

    - incremental: after the first snapshot, you pay only for the storage of data that changed since the previous one

    - a non-bootable, point-in-time copy of an EBS volume

  - AMI

    - used as a backup of an entire EC2 instance

    - widely used to replace a failed EC2 instance

    - pay only for the storage that you use (the underlying EBS snapshots)

    - a bootable image used to launch EC2 instances

    - creating an AMI also creates EBS snapshots of the instance's volumes
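The "pay only for the modified data" point can be illustrated with a toy model in which a volume is a map from block number to contents. This is a hypothetical sketch, not how EBS is implemented or billed. Real billing is per GB-month, and the model ignores deleted blocks. The first snapshot stores every block, each later one stores only the blocks that changed, and a restore replays the chain.

```python
def incremental_snapshots(states):
    """Return the blocks each snapshot would store.

    `states` is a list of successive volume states (block number -> contents).
    The first snapshot stores every block; later ones store only blocks that
    are new or changed since the previous snapshot. (Toy model: blocks are
    never deleted.)
    """
    deltas = []
    previous = {}
    for state in states:
        delta = {block: data for block, data in state.items()
                 if previous.get(block) != data}
        deltas.append(delta)
        previous = dict(state)
    return deltas


def restore(deltas, snapshot_index):
    """Rebuild the volume as of `snapshot_index` by replaying deltas in order."""
    volume = {}
    for delta in deltas[:snapshot_index + 1]:
        volume.update(delta)
    return volume


# Hypothetical volume states captured on three successive days
day1 = {0: "boot", 1: "app-v1", 2: "data-a"}
day2 = {0: "boot", 1: "app-v2", 2: "data-a"}              # block 1 changed
day3 = {0: "boot", 1: "app-v2", 2: "data-b", 3: "logs"}   # block 2 changed, block 3 added

deltas = incremental_snapshots([day1, day2, day3])
print([len(d) for d in deltas])  # [3, 1, 2]
```

Restoring the latest state needs every delta in the chain. For the same reason, deleting an EBS snapshot only removes data that no other snapshot references.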

How does AWS auto scaling work?

- configure a single, unified scaling policy per application resource

- explore the application (discover its scalable resources)

- choose the service you want to scale

- select what to optimize, e.g. cost or performance

- keep track of scaling

- a scaling plan lets the user configure a set of scaling instructions based on the application's requirements
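Choosing between cost and performance often comes down to picking a target utilization. A lower target keeps more headroom, so you run more instances. A higher target packs the load onto fewer instances. The sketch below is minimal and rests on two assumptions. First, the load is measured in the same hypothetical units as per-instance capacity. Second, the three target values are illustrative only.

```python
def instances_needed(total_load, target_percent, capacity_per_instance=100):
    """Smallest instance count that keeps average utilization at or below target.

    Integer ceiling division avoids floating-point rounding surprises.
    """
    if not 0 < target_percent <= 100:
        raise ValueError("target_percent must be in (0, 100]")
    numerator = total_load * 100
    denominator = capacity_per_instance * target_percent
    return max(1, (numerator + denominator - 1) // denominator)


# Illustrative targets for three optimization goals (hypothetical values)
TARGETS = {"performance": 40, "balanced": 50, "cost": 70}

for goal, target in TARGETS.items():
    print(f"{goal}: {instances_needed(280, target)} instances")
# performance: 7 instances
# balanced: 6 instances
# cost: 4 instances
```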

Launch Template

How to set up a Launch Template?

EC2 >> Instances >> Launch Templates >> Create launch template

We can set:

- Name

- Version description

- AMI. We can create an AMI for each version of our application and name them, e.g. ami-my-app-v1, ami-my-app-v2, etc. In the same way, we can create a new version of the launch template and bind the desired AMI (application) version to it.

- Instance type, e.g. t2.micro

- Key pair (for secure connection to the instance)

- Network settings

  - Launch into:

    - VPC

    - Shared network

  - Security group

- Storage (volumes), e.g. Volume 1 (AMI Root, 8 GiB, EBS, General Purpose SSD)

- Resource tags

- Network interfaces

- User data

User data is a script that runs when the instance first boots. For example, we can use it to set an environment variable in our application's env config file.

Shebang arguments explained:

- `-e`: exit immediately if a command exits with a non-zero status.

- `-x`: print commands and their arguments as they are executed.
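Here is a sketch that composes such a user data script in Python, using both flags in the shebang, and then base64-encodes it. The encoding step matters because launch template user data passed through the EC2 API must be base64-encoded; the console can do this for you. The config file path `/etc/my-app/app.env` and the variable `APP_ENV` are hypothetical.

```python
import base64
import shlex


def build_user_data(env_file, variables):
    """Compose a shell script that appends KEY=VALUE lines to env_file."""
    lines = [
        "#!/bin/bash -ex",  # -e: stop at the first failing command; -x: trace each command
        f'mkdir -p "$(dirname {shlex.quote(env_file)})"',
    ]
    for key, value in variables.items():
        pair = f"{key}={value}"
        # Quote the KEY=VALUE pair so spaces or special characters stay safe
        lines.append(f"echo {shlex.quote(pair)} >> {shlex.quote(env_file)}")
    return "\n".join(lines) + "\n"


# Hypothetical config file and variable
script = build_user_data("/etc/my-app/app.env", {"APP_ENV": "production"})
encoded = base64.b64encode(script.encode("utf-8")).decode("ascii")

print(script)
# #!/bin/bash -ex
# mkdir -p "$(dirname /etc/my-app/app.env)"
# echo APP_ENV=production >> /etc/my-app/app.env
```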

Launch templates are versioned, but we can't set the version number manually. AWS assigns it automatically, starting at 1 and incrementing by one with each new version.
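The numbering rule can be modelled with a small hypothetical class; this is not the AWS API. Version numbers come from a counter the caller never controls. A version can be requested by number or through the aliases `$Latest` and `$Default`, which auto scaling groups also accept when selecting a launch template version. The AMI names follow the ami-my-app-vN naming scheme; real AMI IDs look different.

```python
import itertools


class LaunchTemplate:
    """Toy model of launch template versioning (hypothetical, not the AWS API)."""

    def __init__(self, name, image_id, instance_type, description=""):
        self.name = name
        self.versions = {}
        self._numbers = itertools.count(1)  # versions start at 1
        self.default_version = self.create_version(image_id, instance_type, description)

    def create_version(self, image_id, instance_type, description=""):
        number = next(self._numbers)  # assigned automatically, never chosen
        self.versions[number] = {
            "ImageId": image_id,
            "InstanceType": instance_type,
            "VersionDescription": description,
        }
        return number

    def resolve(self, version):
        """Look up a version by number, "$Latest" or "$Default"."""
        if version == "$Latest":
            version = max(self.versions)
        elif version == "$Default":
            version = self.default_version
        return self.versions[int(version)]


template = LaunchTemplate("my-app-template", "ami-my-app-v1", "t2.micro", "v1")
v2 = template.create_version("ami-my-app-v2", "t2.micro", "v2")
print(v2)                                       # 2
print(template.resolve("$Latest")["ImageId"])   # ami-my-app-v2
print(template.resolve("$Default")["ImageId"])  # ami-my-app-v1
```

Creating a version does not change the default. An auto scaling group that points at `$Default` keeps launching the old AMI until the default version is changed explicitly.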

Launch templates can also be used outside of auto scaling and load balancing. Whenever we want to launch an instance, we don't need to fill in its details manually; we can just use "Launch instance from template".

In our scenario, though, we want the auto scaling group to use the launch template to launch EC2 instances.
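The console form maps onto the `LaunchTemplateData` structure of the EC2 `CreateLaunchTemplate` API. The sketch below only builds the request as a plain dictionary, so it runs without AWS credentials. The key pair, AMI ID, security group ID, device name, and tag values are hypothetical placeholders, and the actual boto3 call is left as a comment.

```python
import base64


def launch_template_request(name, version_description, ami_id, instance_type,
                            key_name, security_group_ids, user_data_script):
    """Build keyword arguments for EC2 CreateLaunchTemplate (hypothetical values)."""
    return {
        "LaunchTemplateName": name,
        "VersionDescription": version_description,
        "LaunchTemplateData": {
            "ImageId": ami_id,
            "InstanceType": instance_type,
            "KeyName": key_name,
            "SecurityGroupIds": security_group_ids,
            # Root volume: 8 GiB General Purpose SSD; the device name depends on the AMI
            "BlockDeviceMappings": [
                {"DeviceName": "/dev/xvda",
                 "Ebs": {"VolumeSize": 8, "VolumeType": "gp3", "DeleteOnTermination": True}}
            ],
            "TagSpecifications": [
                {"ResourceType": "instance", "Tags": [{"Key": "Name", "Value": name}]}
            ],
            # Launch template user data must be base64-encoded
            "UserData": base64.b64encode(user_data_script.encode("utf-8")).decode("ascii"),
        },
    }


request = launch_template_request(
    name="my-app-template",
    version_description="v1",
    ami_id="ami-0123456789abcdef0",               # hypothetical AMI ID
    instance_type="t2.micro",
    key_name="my-key-pair",                       # hypothetical key pair
    security_group_ids=["sg-0123456789abcdef0"],  # hypothetical security group
    user_data_script="#!/bin/bash -ex\necho hello\n",
)
# With credentials configured, the request could be sent with:
#   boto3.client("ec2").create_launch_template(**request)
print(request["LaunchTemplateData"]["InstanceType"])  # t2.micro
```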

Auto scaling group

An auto scaling group manages how many EC2 instances run in parallel, keeping their number between the configured minimum and maximum capacity.

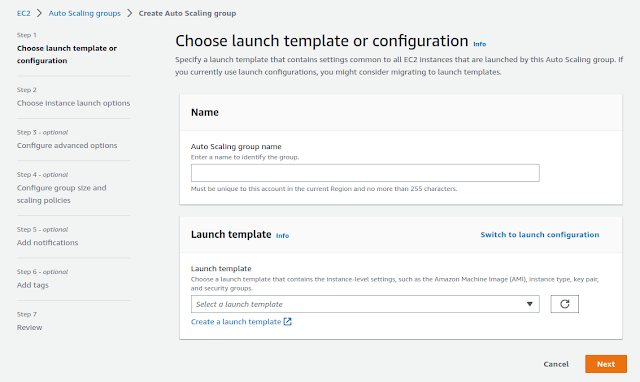

How to set up an Auto scaling group?

EC2 >> Auto scaling groups >> Create Auto scaling group

- Choose launch template or configuration

  - Auto scaling group name

  - Launch template. We can select it and also select:

    - Launch template version (we can use this to select the version of our application that we want running on the new instances)

- Choose instance launch options

  - Network

    - VPC

    - Availability Zones and subnets (in the form az_name | subnet_name). We want to select all of them so that the group has a large pool of AZs to launch into if something happens to the EC2 instances running in one of them.

  - Instance type requirements - we can override the instance type from the launch template here

- Configure advanced options

  - Load balancing (optional)

    - No load balancer - traffic to the auto scaling group will not be fronted by a load balancer

    - Attach to an existing load balancer

      - Here we actually specify target groups, not the load balancer directly

    - Attach to a new load balancer - quickly create a basic load balancer

  - Health checks (optional)

    - Health check type

      - EC2 - always enabled

      - ELB - can be enabled when load balancing is used; instances that fail the load balancer's health checks are then also replaced

    - Health check grace period - amount of time until EC2 Auto Scaling performs the first health check on new instances after they are put into service, e.g. 300 seconds

  - Additional settings (optional)

    - Monitoring - enable group metrics collection within CloudWatch

- Configure group size and scaling policies

  - Group size

    - Desired capacity - how many EC2 instances we want running simultaneously. It must be between the minimum and maximum capacity, e.g. 2

    - Minimum capacity, e.g. 2

    - Maximum capacity, e.g. 8

  - Scaling policies - choose whether to use a scaling policy to dynamically resize the auto scaling group to meet changes in demand

    - Target tracking scaling policy - choose a desired outcome and leave it to the scaling policy to add and remove capacity as needed to achieve that outcome

      - Name - any name we choose

      - Metric type, e.g. Average CPU utilization (averaged over all running EC2 instances in the group)

      - Target value, e.g. 50 (%)

      - Instance warm-up - the number of seconds new instances need to warm up before their metrics are included

      - Disable scale in to create only a scale-out policy

    - None

  - Instance scale-in protection (optional) - if enabled, newly created instances are protected from scale-in by default

- Add notifications - send notifications to SNS topics whenever Amazon EC2 Auto Scaling launches or terminates EC2 instances in the auto scaling group

- Add tags

- Review
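To see how the group size limits and a target tracking policy interact, here is a simplified proportional model. The current capacity is scaled by the ratio of the average metric to the target, then clamped to the minimum and maximum. This is an approximation for intuition only, not the exact algorithm AWS uses. The function names are hypothetical.

```python
def validate_group_size(min_size, desired, max_size):
    """Desired capacity must lie between minimum and maximum capacity."""
    if not min_size <= desired <= max_size:
        raise ValueError(f"desired={desired} is outside [{min_size}, {max_size}]")


def target_tracking_capacity(cpu_per_instance, target, min_size, max_size,
                             scale_in_enabled=True):
    """Estimate the capacity a target tracking policy would move toward.

    cpu_per_instance: current CPU utilization (%) of each running instance.
    current * (average / target) equals sum(cpu) / target, computed here with
    integer ceiling division so the estimate always rounds up.
    """
    current = len(cpu_per_instance)
    validate_group_size(min_size, current, max_size)
    proposed = -(-sum(cpu_per_instance) // target)
    if not scale_in_enabled:
        # "Disable scale in": the policy may add capacity but never remove it
        proposed = max(proposed, current)
    return max(min_size, min(max_size, proposed))


print(target_tracking_capacity([90, 70], target=50, min_size=2, max_size=8))  # 4
print(target_tracking_capacity([20] * 4, target=50, min_size=2, max_size=8))  # 2
print(target_tracking_capacity([20] * 4, target=50, min_size=2, max_size=8,
                               scale_in_enabled=False))                       # 4
print(target_tracking_capacity([95] * 8, target=50, min_size=2, max_size=8))  # 8
```

The last call shows why the maximum capacity matters. Demand alone would suggest 16 instances, but the group stops at 8.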

As soon as the auto scaling group is created, it becomes active. If we set the desired capacity to 2, the auto scaling group immediately launches two instances. If we stop or terminate them manually, the group marks them as unhealthy, terminates them, and immediately launches two replacement instances. To get rid of these instances permanently, we need to delete the auto scaling group itself (deleting it from the console also terminates its instances) or set its minimum and desired capacity to 0.
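The replace-on-failure behaviour is a reconciliation loop. On each pass, the group drops instances that are no longer running and launches new ones until the count matches the desired capacity. Below is a toy sketch with a hypothetical class and simulated instance IDs. It also spreads launches across the selected Availability Zones, since EC2 Auto Scaling tries to keep instances balanced across the enabled AZs.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Instance:
    instance_id: str
    az: str
    state: str = "running"  # "running", "stopped" or "terminated"


class ToyAutoScalingGroup:
    """Hypothetical model of an auto scaling group's reconcile loop."""

    def __init__(self, desired, azs):
        self.desired = desired
        self.azs = list(azs)
        self.instances = []
        self._ids = itertools.count(1)

    def _count_per_az(self):
        counts = {az: 0 for az in self.azs}
        for inst in self.instances:
            counts[inst.az] += 1
        return counts

    def reconcile(self):
        # Any instance that is not running counts as unhealthy:
        # it is terminated and dropped from the group.
        for inst in self.instances:
            if inst.state != "running":
                inst.state = "terminated"
        self.instances = [i for i in self.instances if i.state == "running"]

        # Scale out: launch into the AZ with the fewest instances.
        while len(self.instances) < self.desired:
            counts = self._count_per_az()
            az = min(self.azs, key=counts.get)
            self.instances.append(Instance(f"i-{next(self._ids):04d}", az))

        # Scale in: remove an instance from the AZ with the most instances,
        # loosely mirroring the first step of the default termination policy.
        while len(self.instances) > self.desired:
            counts = self._count_per_az()
            az = max(self.azs, key=counts.get)
            victim = next(i for i in self.instances if i.az == az)
            victim.state = "terminated"
            self.instances.remove(victim)


asg = ToyAutoScalingGroup(desired=2, azs=["us-east-1a", "us-east-1b"])
asg.reconcile()                     # launches i-0001 (1a) and i-0002 (1b)
asg.instances[0].state = "stopped"  # someone stops i-0001 manually
asg.reconcile()                     # i-0001 is terminated and replaced
print([(i.instance_id, i.az) for i in asg.instances])
# [('i-0002', 'us-east-1b'), ('i-0003', 'us-east-1a')]
```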

As seen above, load balancing is optional, so auto scaling (using auto scaling groups) can work with no load balancer involved at all. Typically, though, we want a load balancer so that incoming traffic is evenly distributed across all running EC2 instances. We can set up an NGINX server as a load balancer or use an AWS (Application) Load Balancer. In the latter case, in the Load balancing options above, we choose to attach the auto scaling group to an existing or newly created load balancer. It's worth mentioning that the auto scaling group is associated with the load balancer indirectly, via target groups.
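The indirect association works roughly as follows. The auto scaling group registers each instance it launches in the target group and deregisters instances it terminates. The load balancer then routes requests across whatever targets are registered. Round robin is the default routing algorithm for Application Load Balancer target groups. Here is a toy sketch with hypothetical class and instance names:

```python
class TargetGroup:
    """Toy target group with round-robin routing (hypothetical model)."""

    def __init__(self, name):
        self.name = name
        self.targets = []
        self._next = 0

    def register(self, instance_id):
        # Called when the auto scaling group launches an instance
        if instance_id not in self.targets:
            self.targets.append(instance_id)

    def deregister(self, instance_id):
        # Called before the auto scaling group terminates an instance
        self.targets.remove(instance_id)

    def route(self):
        """Pick the next registered target in round-robin order."""
        if not self.targets:
            raise RuntimeError(f"target group {self.name} has no registered targets")
        target = self.targets[self._next % len(self.targets)]
        self._next += 1
        return target


tg = TargetGroup("my-app-tg")
for instance_id in ["i-0001", "i-0002"]:
    tg.register(instance_id)
print([tg.route() for _ in range(4)])  # ['i-0001', 'i-0002', 'i-0001', 'i-0002']

tg.register("i-0003")  # scale out: a third instance joins the rotation
print(sorted(set(tg.route() for _ in range(3))))  # ['i-0001', 'i-0002', 'i-0003']
```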

Use an instance refresh to update instances in an Auto Scaling group - Amazon EC2 Auto Scaling

Resources:

App Scaling - AWS Application Auto Scaling - AWS

Set capacity limits on your Auto Scaling group - Amazon EC2 Auto Scaling
