Containers use the same DNS servers as the host by default, but you can override this with --dns.

By default, containers inherit the DNS settings as defined in the /etc/resolv.conf configuration file. Containers that attach to the default bridge network receive a copy of this file. Containers that attach to a custom network use Docker's embedded DNS server. The embedded DNS server forwards external DNS lookups to the DNS servers configured on the host.
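To see which DNS servers a container on the default bridge network would inherit, it can help to list the nameserver entries in the file it copies. The following is a minimal sketch, not part of Docker itself: the function name `parse_nameservers` and the sample file contents are made up for illustration.

```python
def parse_nameservers(resolv_conf_text: str) -> list[str]:
    """Return the nameserver addresses listed in resolv.conf-style text."""
    servers = []
    for line in resolv_conf_text.splitlines():
        # Drop comments (introduced by '#' or ';') and surrounding whitespace.
        line = line.split("#", 1)[0].split(";", 1)[0].strip()
        fields = line.split()
        if len(fields) >= 2 and fields[0] == "nameserver":
            servers.append(fields[1])
    return servers


# Hypothetical file contents, used only for illustration.
sample = """\
# hypothetical host resolv.conf
nameserver 10.0.0.2
search example.internal
nameserver 8.8.8.8
"""

print(parse_nameservers(sample))  # ['10.0.0.2', '8.8.8.8']
```

Running the same function on the text of /etc/resolv.conf on the host and inside the container is one way to check whether the two actually match.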

Friday, 28 June 2024

How to fix Docker container not resolving domain names

Monday, 27 May 2024

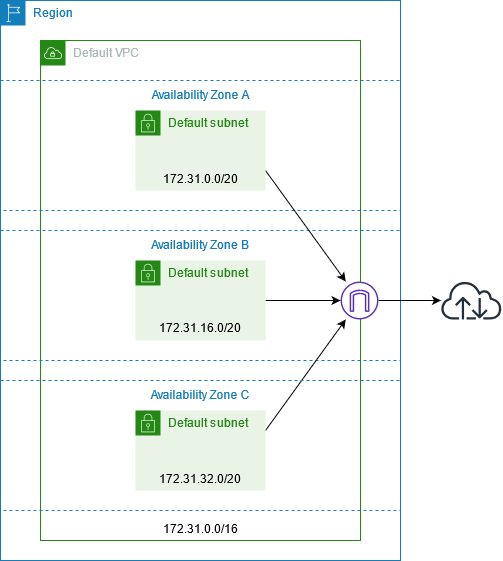

AWS Virtual Private Cloud (VPC)

A VPC is an isolated portion of the AWS Cloud populated by AWS objects, such as Amazon EC2 instances.

- public subnet in each Availability Zone

- internet gateway

- settings to enable DNS resolution.


| source: https://docs.aws.amazon.com/vpc/latest/userguide/default-vpc.html |

- 5 VPCs per region

- 200 subnets per VPC

Default VPC


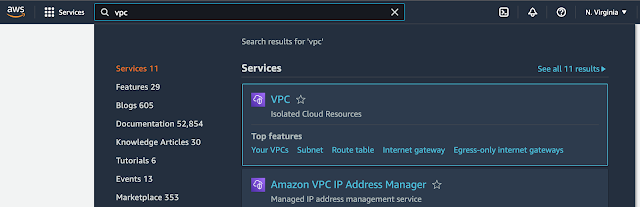

| Finding a VPC service in AWS Console |


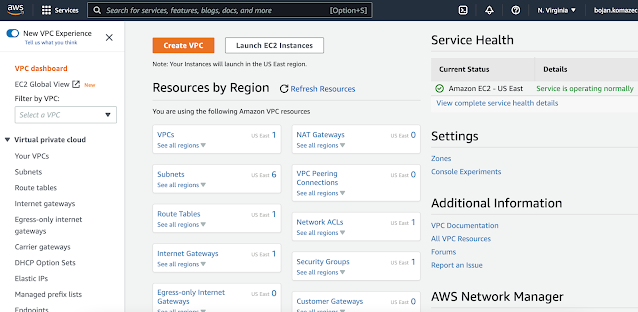

| VPC Service Dashboard |


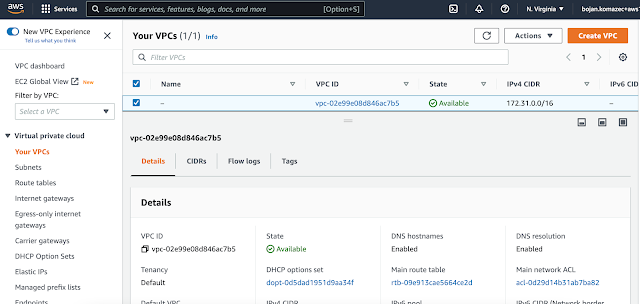

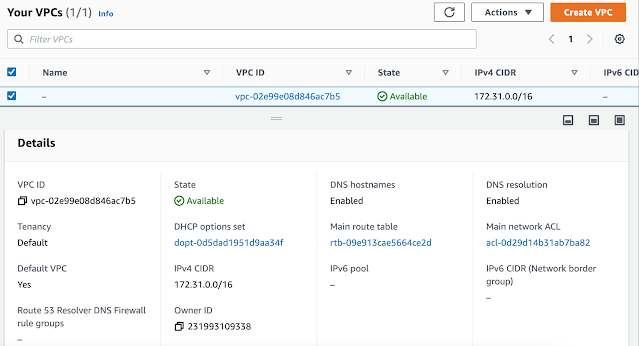

| Default VPC is listed |


| Default VPC details |

Creating a VPC

You must specify an IPv4 address range when you create a VPC. Specify the IPv4 address range as a Classless Inter-Domain Routing (CIDR) block; for example, 10.0.0.0/16. You cannot specify an IPv4 CIDR block larger than /16. Optionally, you can also associate an IPv6 CIDR block with the VPC.

- ranges that we might use in other regions

- ranges that we might use in future with on-premises or co-location

- how big our network needs to be: we don't want to create a VPC with thousands of allocated IP addresses if it will only ever hold a few hundred machines. Equally, we don't want to allocate too few IP addresses: if we later scale out e.g. EC2 instances and load balancers in the VPC, we won't be able to launch new instances because there won't be enough free addresses.
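The planning points above can be checked with Python's standard `ipaddress` module before creating anything in AWS. This is only a sketch: the function names and the list of ranges already in use elsewhere are assumptions for illustration, and the only size rule it enforces is the one stated above (no IPv4 block larger than /16).

```python
import ipaddress


def vpc_cidr_summary(cidr: str) -> dict:
    """Validate a candidate VPC IPv4 CIDR block and report its size."""
    network = ipaddress.ip_network(cidr, strict=True)
    if network.version != 4:
        raise ValueError("expected an IPv4 CIDR block")
    # A smaller prefix length means a larger block; /16 is the largest allowed.
    if network.prefixlen < 16:
        raise ValueError("IPv4 CIDR block must not be larger than /16")
    return {"cidr": str(network), "total_addresses": network.num_addresses}


def overlaps_any(cidr: str, ranges_in_use: list[str]) -> bool:
    """Return True if the candidate block overlaps any range already planned."""
    candidate = ipaddress.ip_network(cidr)
    return any(candidate.overlaps(ipaddress.ip_network(r)) for r in ranges_in_use)


# Hypothetical ranges used in other regions or on-premises.
in_use = ["10.0.0.0/16", "192.168.0.0/16"]

print(vpc_cidr_summary("10.10.0.0/16"))       # {'cidr': '10.10.0.0/16', 'total_addresses': 65536}
print(overlaps_any("10.10.0.0/16", in_use))   # False
print(overlaps_any("10.0.128.0/17", in_use))  # True
```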

Subnets

- ID

- ARN

- State e.g. Available

- CIDR e.g. 172.31.16.0/20

- Number of available IPv4 addresses. This depends on the CIDR mask: a /20 block contains 2^(32-20) = 2^12 = 4096 addresses, and AWS reserves 5 addresses in every subnet (the first four and the last one), which leaves 4091 available.

- IPv6 CIDR

- Availability Zone e.g. eu-west-2a (Subnets are AZ-specific!)

- VPC that it belongs to

- Route table

- Network ACL

- Default subnet (Yes/No)

- Auto-assign public IPv4 address (Yes/No). Enable AWS to automatically assign a public IPv4 or IPv6 address to a new primary network interface for an (EC2) instance in this subnet.

- By default, nondefault subnets have the IPv4 public addressing attribute set to false, and default subnets have this attribute set to true. An exception is a nondefault subnet created by the Amazon EC2 launch instance wizard — the wizard sets the attribute to true.

- Auto-assign IPv6 address

- Auto-assign customer-owned IPv4 address

- IPv6-only (Yes/No)

- Hostname type e.g. IP name

- Resource name DNS A record (Enabled/Disabled)

- Resource name DNS AAAA record (Enabled/Disabled)

- DNS64 (Enabled/Disabled)

- Owner (account ID)
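The address count in the subnet properties above can be reproduced with a few lines of Python. This sketch assumes the AWS rule that 5 addresses in every subnet are reserved (the first four and the last one); the function name is made up for illustration.

```python
import ipaddress

# AWS reserves the first four addresses and the last address of each subnet.
AWS_RESERVED_PER_SUBNET = 5


def usable_ipv4_addresses(cidr: str) -> int:
    """Return how many addresses in the subnet remain after the AWS reservation."""
    network = ipaddress.ip_network(cidr)
    return network.num_addresses - AWS_RESERVED_PER_SUBNET


print(usable_ipv4_addresses("172.31.16.0/20"))  # 4096 - 5 = 4091
print(usable_ipv4_addresses("10.10.1.0/24"))    # 256 - 5 = 251
```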

Creating Subnets

- public for load balancers

- private for container clusters


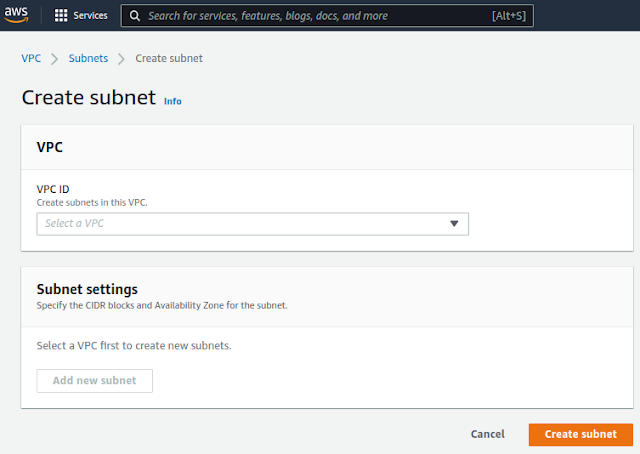

| Default view of the Create subnet dialog |

- Name: public-b

- AZ: eu-west-1b

- CIDR block: 10.10.1.0/24 (if we want to choose the block that is following the previously chosen 10.10.0.0/24)

- Name: public-c

- AZ: eu-west-1c

- CIDR block: 10.10.2.0/24 (if we want to choose the block that is following the previously chosen 10.10.1.0/24)

- Name: private-cluster-[a|b|c]

- AZ: eu-west-1[a|b|c]

- CIDR block: 10.10.[3|4|5].0/24 (if we want to choose the block that is following the previously chosen; we use /24 assuming that 251 IP addresses will be enough for cluster scaling)
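The consecutive /24 blocks listed above can be generated rather than worked out by hand. The sketch below assumes the VPC CIDR is 10.10.0.0/16 and that the first public subnet is called public-a in eu-west-1a; neither is stated explicitly above, so treat both as illustrative.

```python
import ipaddress

# Assumed VPC range; the subnets above are all carved out of 10.10.x.0/24 blocks.
vpc = ipaddress.ip_network("10.10.0.0/16")

names = [
    "public-a", "public-b", "public-c",
    "private-cluster-a", "private-cluster-b", "private-cluster-c",
]
zones = ["eu-west-1a", "eu-west-1b", "eu-west-1c"] * 2

# subnets() yields consecutive /24 blocks; zip() stops after the six names.
plan = [
    (name, zone, str(block))
    for name, zone, block in zip(names, zones, vpc.subnets(new_prefix=24))
]

for name, zone, cidr in plan:
    print(f"{name:<18} {zone}  {cidr}")
```

Generating the plan this way guarantees that the blocks are adjacent and do not overlap.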

Routing

VPC has an implicit router, and you use route tables to control where network traffic is directed. Each subnet in your VPC must be associated with a route table, which controls the routing for the subnet (subnet route table). You can explicitly associate a subnet with a particular route table. Otherwise, the subnet is implicitly associated with the main route table. A subnet can only be associated with one route table at a time, but you can associate multiple subnets with the same subnet route table.

(source: Configure route tables - Amazon Virtual Private Cloud)

Route table

A route table contains a set of rules, called routes, that are used to determine where network traffic from your subnet or gateway is directed.

Enabling Internet Access in VPC

In the above example "local" means the VPC router will send traffic in that CIDR range to the local VPC. Specifically, it will send the traffic to the specific network interface that has the IP address specified and drop the packet if nothing in your VPC has that IP address.

Also worth noting: the local rule can't be overridden. The VPC router will ALWAYS route local VPC traffic to the VPC (and specifically route directly to the correct interface without letting anything else in the VPC have the ability to sniff it). That rule is provided mostly as a for-your-awareness rule.

Internet Gateway

An internet gateway is a horizontally scaled, redundant, and highly available VPC component that enables communication between your VPC and the internet. To use an internet gateway, attach it to your VPC and specify it as a target in your subnet route table for internet-routable IPv4 or IPv6 traffic. An internet gateway performs network address translation (NAT) for instances that have been assigned public IPv4 addresses.

An internet gateway enables resources in your public subnets (such as EC2 instances) to connect to the internet if the resource has a public IPv4 address or an IPv6 address. Similarly, resources on the internet can initiate a connection to resources in your subnet using the public IPv4 address or IPv6 address. For example, an internet gateway enables you to connect to an EC2 instance in AWS using your local computer.

An internet gateway provides a target in your VPC route tables for internet-routable traffic. For communication using IPv4, the internet gateway also performs network address translation (NAT).

NAT Gateway

You can use a network address translation (NAT) gateway to enable instances in a private subnet to connect to services outside your VPC but prevent such external services from initiating a connection with those instances. There are two types of NAT gateways: public and private.

A public NAT gateway enables instances in private subnets to connect to the internet but prevents them from receiving unsolicited inbound connections from the internet. You should associate an elastic IP address with a public NAT gateway and attach an internet gateway to the VPC containing it.

A private NAT gateway enables instances in private subnets to connect to other VPCs or your on-premises network but prevents any unsolicited inbound connections from outside your VPC. You can route traffic from the NAT gateway through a transit gateway or a virtual private gateway. Private NAT gateway traffic can't reach the internet.

- Name

- Subnet in which it will be created (NAT gateway is subnet-specific)

- Connectivity type:

- Public

- Elastic IP allocation ID: we need to assign an Elastic IP address to the NAT gateway. BK: an Elastic IP (public IP) must be attached to the public NAT gateway explicitly, because the subnet's Auto-assign public IPv4 address property applies only to EC2 instances in that subnet. Even if that property is set to true, the NAT gateway won't be assigned a public IP address automatically.

- Private

- Tags

Elastic IP allocation ID

Choose an Elastic IP address to assign to your NAT gateway. Only Elastic IP addresses that are not associated with any resources are listed. By default, you can have up to 2 Elastic IP addresses per public NAT gateway. You can increase the limit by requesting a quota adjustment.

To use an Elastic IP address that is currently associated with another resource, you must first disassociate the address from the resource. Otherwise, if you do not have any Elastic IP addresses you can use, allocate one to your account.

When you assign an EIP to a public NAT gateway, the network border group of the EIP must match the network border group of the Availability Zone (AZ) that you're launching the public NAT gateway into. If it's not the same, the NAT gateway will fail to launch. You can see the network border group for the subnet's AZ by viewing the details of the subnet. Similarly, you can view the network border group of an EIP by viewing the details of the EIP address.
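The same settings can be supplied programmatically. The sketch below only builds and validates the keyword arguments for the boto3 EC2 client method `create_nat_gateway`; it does not call AWS. The helper name `nat_gateway_request` and all resource IDs are hypothetical.

```python
def nat_gateway_request(name, subnet_id, connectivity_type="public", allocation_id=None):
    """Build keyword arguments for boto3's EC2 create_nat_gateway call."""
    if connectivity_type not in ("public", "private"):
        raise ValueError("connectivity_type must be 'public' or 'private'")
    if connectivity_type == "public" and allocation_id is None:
        raise ValueError("a public NAT gateway needs an Elastic IP allocation ID")
    if connectivity_type == "private" and allocation_id is not None:
        raise ValueError("a private NAT gateway does not take an Elastic IP")

    params = {
        "SubnetId": subnet_id,  # the NAT gateway is subnet-specific
        "ConnectivityType": connectivity_type,
        "TagSpecifications": [
            {"ResourceType": "natgateway", "Tags": [{"Key": "Name", "Value": name}]}
        ],
    }
    if allocation_id is not None:
        params["AllocationId"] = allocation_id
    return params


# Hypothetical IDs, for illustration only.
params = nat_gateway_request(
    "nat-public-a", "subnet-0123456789abcdef0", "public", "eipalloc-0123456789abcdef0"
)
print(params["ConnectivityType"], params["AllocationId"])

# With boto3 installed and credentials configured, the request could be sent as:
#   boto3.client("ec2").create_nat_gateway(**params)
```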


Friday, 10 May 2024

Introduction to Kubernetes Networking

This article extends my notes from a Udemy course "Kubernetes for the Absolute Beginners - Hands-on". All course content rights belong to course creators.

The previous article in the series was Kubernetes Deployments | My Public Notepad.

Networking within a single node

Cluster Networking (multiple nodes)

- All the containers or pods in a Kubernetes cluster must be able to communicate with one another without having to configure NAT

- All nodes must be able to communicate with containers and all containers must be able to communicate with the nodes in the cluster without NAT

- Cisco ACI Networks [Cisco ACI - Application Centric Infrastructure - Cisco]

- Cilium [Cilium - Cloud Native, eBPF-based Networking, Observability, and Security]

- Big Cloud Fabric [Big Cloud Fabric - Red Hat Ecosystem Catalog]

- Flannel [flannel-io/flannel: flannel is a network fabric for containers, designed for Kubernetes]

- VMware [VMware Networking Solutions]

- Zest

- Calico [About Calico | Calico Documentation]

- Weave Net [weaveworks/weave: Simple, resilient multi-host containers networking and more.]

Monday, 27 February 2023

AWS NAT Gateway

What is NAT?

From AWS documentation:

A Network Address Translation (NAT) gateway is a device that forwards traffic from private subnets to other networks.

There are two types of NAT gateways:

- Public: Instances in private subnets can connect to the internet but cannot receive unsolicited inbound connections from the internet.

- Private: Instances in private subnets can connect to other VPCs or your on-premises network.

Each private or public NAT gateway must have a private IPv4 address assigned to it. Each public NAT gateway must also have an elastic IP (EIP) address (a static public IPv4 address associated with your AWS account) associated with it. Choosing a private IPv4 address is optional. If you don't choose a private IPv4 address, one will be automatically assigned to your NAT gateway at random from the subnet that your NAT gateway is in. You can configure a custom private IPv4 address in Additional settings.

After you create the NAT gateway, you must update the route table that’s associated with the subnet you chose for the NAT gateway. If you create a public NAT gateway, you must add a route to the route table that directs traffic destined for the internet to the NAT gateway. If you create a private NAT gateway, you must add a route to the route table that directs traffic destined for another VPC or your on-premises network to the NAT gateway.

When to use NAT?

From AWS documentation:

The instances in the public subnet can send outbound traffic directly to the internet, whereas the instances in the private subnet can't. Instead, the instances in the private subnet can access the internet by using a network address translation (NAT) gateway that resides in the public subnet. The database servers can connect to the internet for software updates using the NAT gateway, but the internet cannot establish connections to the database servers.

Note that NAT is required if instances in a private subnet need to send a request (initiate a new connection) to a host on the internet. If a request has reached the private instance from outside (via an Application Load Balancer, for example), NAT is not required for the response. See: amazon web services - Can a EC2 in the private subnet sends traffic to the internet through ELB without using NAT gateway/instance? - Server Fault

How to create NAT?

Private NAT gateway traffic can't reach the internet.

When assigning private IPv4 addresses to a NAT gateway, choose how you want to assign them:

- Auto-assign: AWS automatically chooses a primary private IPv4 address, and you choose whether AWS should also assign up to 7 secondary private IPv4 addresses to the NAT gateway. AWS chooses and assigns them for you at random from the subnet that your NAT gateway is in.

- Custom: Choose the primary private IPv4 address and up to 7 secondary private IPv4 addresses to assign to the NAT gateway.

You can assign up to 8 private IPv4 addresses to your private NAT gateway. The first IPv4 address that you assign will be the primary IPv4 address, and any additional addresses will be considered secondary IPv4 addresses. Choosing private IPv4 addresses is optional. If you don't choose a private IPv4 address, one will be automatically assigned to your NAT gateway. You can configure custom private IPv4 addresses in Additional settings.

Secondary IPv4 addresses are optional and should be assigned or allocated when your workloads that use a NAT gateway exceed 55,000 concurrent connections to a single destination (the same destination IP, destination port, and protocol). Secondary IPv4 addresses increase the number of available ports, and therefore they increase the limit on the number of concurrent connections that your workloads can establish using a NAT gateway.
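The effect of secondary addresses on the connection limit can be estimated with simple arithmetic. This sketch assumes the limit scales linearly at 55,000 concurrent connections per address to a single destination, with at most 8 addresses in total (1 primary plus up to 7 secondary); the function names are made up for illustration.

```python
CONNECTIONS_PER_ADDRESS = 55_000  # per address, to a single destination
MAX_ADDRESSES = 8                 # 1 primary + up to 7 secondary


def max_concurrent_connections(secondary_addresses: int) -> int:
    """Estimate the connection limit to one destination for a NAT gateway."""
    if not 0 <= secondary_addresses <= MAX_ADDRESSES - 1:
        raise ValueError("secondary_addresses must be between 0 and 7")
    return CONNECTIONS_PER_ADDRESS * (1 + secondary_addresses)


def addresses_needed(target_connections: int) -> int:
    """Return how many addresses (primary included) the target would need."""
    needed = max(1, -(-target_connections // CONNECTIONS_PER_ADDRESS))  # ceiling division
    if needed > MAX_ADDRESSES:
        raise ValueError("target exceeds what a single NAT gateway can provide")
    return needed


print(max_concurrent_connections(0))  # 55000
print(max_concurrent_connections(7))  # 440000
print(addresses_needed(120_000))      # 3
```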

You can use the NAT gateway CloudWatch metrics ErrorPortAllocation and PacketsDropCount to determine if your NAT gateway is generating port allocation errors or dropping packets. To resolve this issue, add secondary IPv4 addresses to your NAT gateway.